API scraping involves the automated extraction of data from an API (Application Programming Interface) using scraping tools and scripts.

An API is a set of tools and protocols that enable software developers to create applications and services that communicate with other systems. By accessing an API, developers can retrieve specific data and functionalities from an external application or service.

API scraping is used to extract data from an API and convert it into a readable format for an application or service. Scraping tools automate the process of data extraction and processing, making it faster and more efficient.
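As a rough illustration of the "extract and convert" step described above, here is a minimal Python sketch that requests JSON from an API and flattens it into CSV. It uses only the standard library. The function names `fetch_json` and `records_to_csv`, the field names, and the sample payload are assumptions made for this example, not part of any particular scraping tool.

```python
import csv
import io
import json
from urllib.request import Request, urlopen


def fetch_json(url, timeout=10.0):
    """Request a URL and decode its JSON body (the URL is supplied by the caller)."""
    request = Request(url, headers={"Accept": "application/json"})
    with urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def records_to_csv(records, fields):
    """Convert a list of JSON objects into CSV text, keeping only `fields`."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, lineterminator="\n")
    writer.writeheader()
    for record in records:
        # Missing keys become empty cells instead of raising an error.
        writer.writerow({name: record.get(name, "") for name in fields})
    return buffer.getvalue()


# Hypothetical payload standing in for a real API response.
sample = json.loads('[{"id": 1, "name": "Widget", "stock": 4}, {"id": 2}]')
csv_text = records_to_csv(sample, ["id", "name"])
print(csv_text)
```

In practice, `fetch_json` would be pointed at an endpoint you are authorized to use, and its result passed to `records_to_csv` in place of the sample data.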

Types of API Scraping

There are several types of API scraping, each serving different purposes. Here are some of the most common types:

- Web Scraping: Extracting data from websites using scraping tools. This can include scraping data from APIs integrated into websites.

- Social Media Scraping: Extracting data from platforms like Twitter, Facebook, Instagram, and LinkedIn. This can include profile information, posts, and comments.

- News Scraping: Gathering news articles and blog posts from news websites. This can include article titles, authors, publication dates, and content.

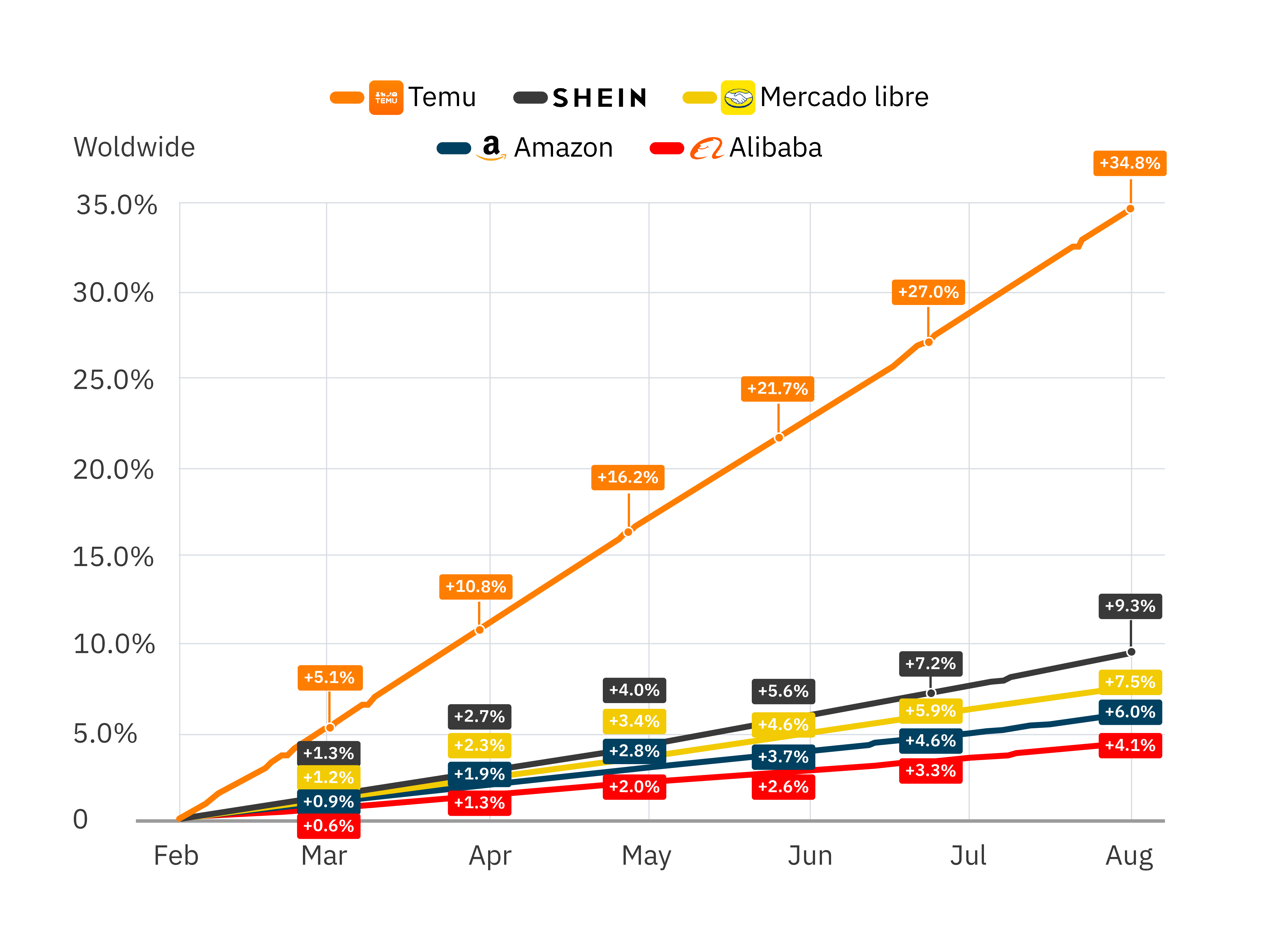

- Price Scraping: Extracting pricing information from e-commerce websites. This can include product names, descriptions, images, and prices.
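To make the price scraping case more concrete, the sketch below turns a product payload into structured records holding the fields mentioned above (name, description, image, price). The payload shape, including the `products` key and the field names, is hypothetical; real e-commerce APIs differ and should be checked against their own documentation.

```python
import json
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation


@dataclass
class PriceRecord:
    name: str
    description: str
    image_url: str
    price: Decimal


def parse_products(payload):
    """Parse a JSON string of products into PriceRecord objects.

    Assumes a hypothetical shape: {"products": [{"name": ..., "price": ...}, ...]}.
    """
    records = []
    for item in json.loads(payload).get("products", []):
        try:
            # Decimal avoids floating-point rounding issues with money values.
            price = Decimal(str(item["price"]))
        except (KeyError, InvalidOperation):
            continue  # Skip entries with a missing or unparseable price.
        records.append(
            PriceRecord(
                name=item.get("name", ""),
                description=item.get("description", ""),
                image_url=item.get("image_url", ""),
                price=price,
            )
        )
    return records
```

Entries without a usable price are skipped rather than guessed, so later analysis only sees values that actually appeared in the response.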

For more detailed information about specific scraping techniques, check out our post on strategies to avoid blocking or limitation in web scraping.

Why Use API Scraping Tools?

API scraping tools are widely used by companies and organizations to obtain crucial data from external sources. These tools automate and simplify the process, providing structured and actionable data. Here are some common uses:

- Data-Driven Decision Making: Companies use scraped data to make informed business decisions.

- Market Analysis: Organizations analyze market trends using extracted data.

- Software and Service Development: Developers use data to build innovative software solutions.

- Research and Studies: Researchers use scraping tools to collect data for academic and industry analysis.

If you’re looking for robust scraping solutions, contact us.
